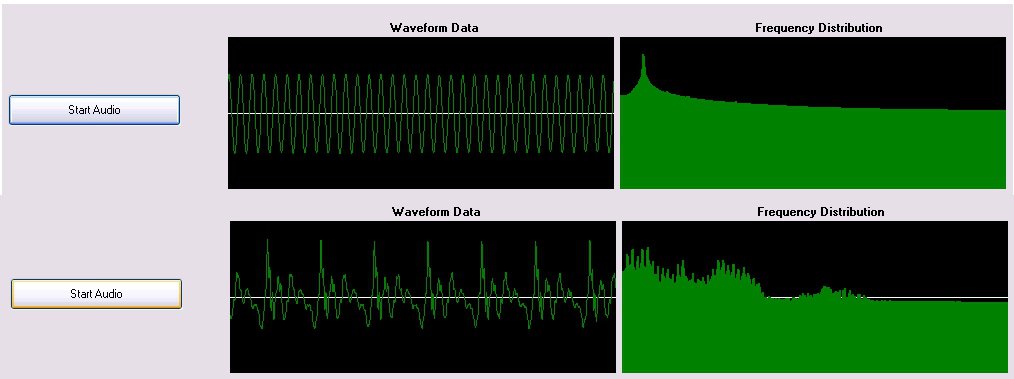

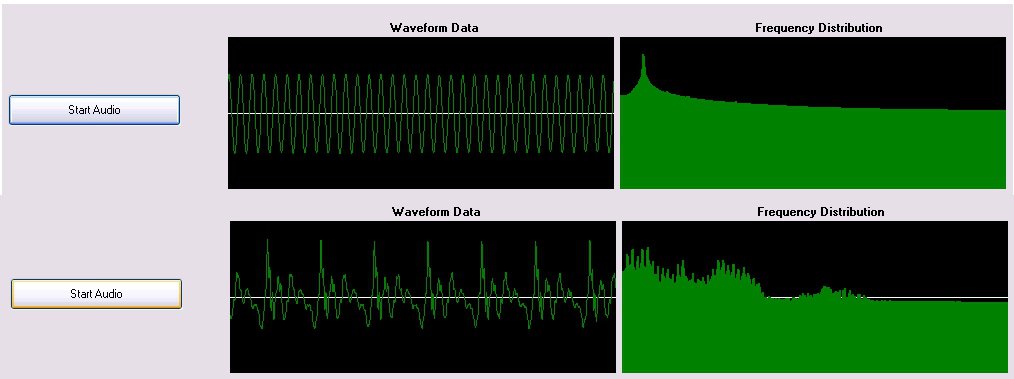

Before Bit can hear like humans do, I needed to make a “virtual cochlea”: a piece of software that takes auditory input from a microphone, converts it into frequencies (like the hair cells in your cochlea do), and sends the data into Bit’s peripheral nervous system. I got most of it done tonight, and I made a real-time graphing system as well. On the left, you can see the waveform data coming off the microphone (top is a steady tone from my KORG, and bottom is me talking). On the right is the same data run through a Fast Fourier Transform (FFT), which converts the time-based waveform data into a frequency-based distribution. This can then be sent directly into a neural nucleus in Bit’s brain via the TCP nervous system.
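For readers curious how the waveform-to-frequency step works, here is a minimal sketch in Python with NumPy. It is not Bit's actual code. It assumes a frame of mono samples has already been captured from the microphone (a synthetic 440 Hz sine stands in for the KORG tone here), applies a Hann window, and takes a real FFT to get one magnitude per frequency bin. The `pack_spectrum` helper and its byte layout (a little-endian `uint32` bin count followed by `float32` magnitudes) are purely hypothetical; the real message format of Bit's TCP nervous system isn't described in this post.

```python
import struct

import numpy as np


def frame_to_spectrum(samples, sample_rate):
    """Convert one frame of mono PCM samples into (frequencies, magnitudes)."""
    samples = np.asarray(samples, dtype=np.float64)
    # A Hann window tapers the frame edges to reduce spectral leakage.
    window = np.hanning(len(samples))
    # rfft keeps only the non-negative frequencies of a real-valued signal.
    spectrum = np.fft.rfft(samples * window)
    magnitudes = np.abs(spectrum)
    # Center frequency (Hz) of each bin; bin spacing is sample_rate / len(samples).
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    return freqs, magnitudes


def pack_spectrum(magnitudes):
    """Hypothetical wire format: uint32 bin count, then float32 magnitudes (little-endian)."""
    mags = np.asarray(magnitudes, dtype="<f4")
    return struct.pack("<I", len(mags)) + mags.tobytes()


# Usage: a synthetic 440 Hz tone stands in for one microphone frame.
sample_rate = 8000
frame_size = 1024
t = np.arange(frame_size) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)

freqs, mags = frame_to_spectrum(tone, sample_rate)
peak_hz = freqs[np.argmax(mags)]
payload = pack_spectrum(mags)
print(f"peak near {peak_hz:.1f} Hz, {len(payload)} bytes to send")
```

With 1024 samples at 8000 Hz, each bin is about 7.8 Hz wide, so the peak lands on the bin closest to 440 Hz rather than exactly on it. In a live setup the payload would go out over an already-connected socket with `sock.sendall(payload)`, once per captured frame.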

Hello - and thanks for visiting my site! I maintain ToniWestbrook.com to share information and projects with others with a passion for applying computer science in creative ways. Let's make the world a better and more beautiful place through computing! | More about Toni »

