Amazingly enough, I am a Star Trek fan (hard to believe, I know). When I was little, I would watch the original movies and cartoon series (I don’t think the original series was airing anywhere near me), and then once Star Trek: The Next Generation came out, I was completely hooked. TNG was the pinnacle for me – I watched DS9 and Voyager as well, but not to the religious degree that I followed the adventures of NCC-1701-D. As each series was canceled, I’d watch the movies when they came out (the new reboot was fantastic) – but there is definitely a piece of me that missed having Star Trek regularly in my life. That is why I was so excited at the prospect of Trek’s newest game, Star Trek Online.

MMORPG: The Next Generation

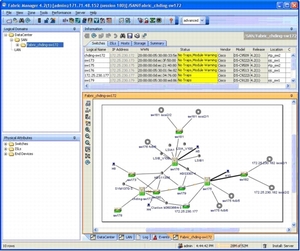

Numerous games have used the Star Trek IP in the past. What makes Star Trek Online different is its venture into the massively multiplayer world. Developed by Cryptic Studios (Champions Online), STO allows each player to captain their own starship in the Star Trek universe. This also includes getting to role-play as one of the many races in Trek, such as Andorians, Trill, Vulcans, and Klingons (the list goes on quite a bit). From fast-paced space battles to a slew of away team missions, Cryptic has attempted to convert the full experience into a cooperative (and head-to-head) online environment. But did they succeed?

The Good

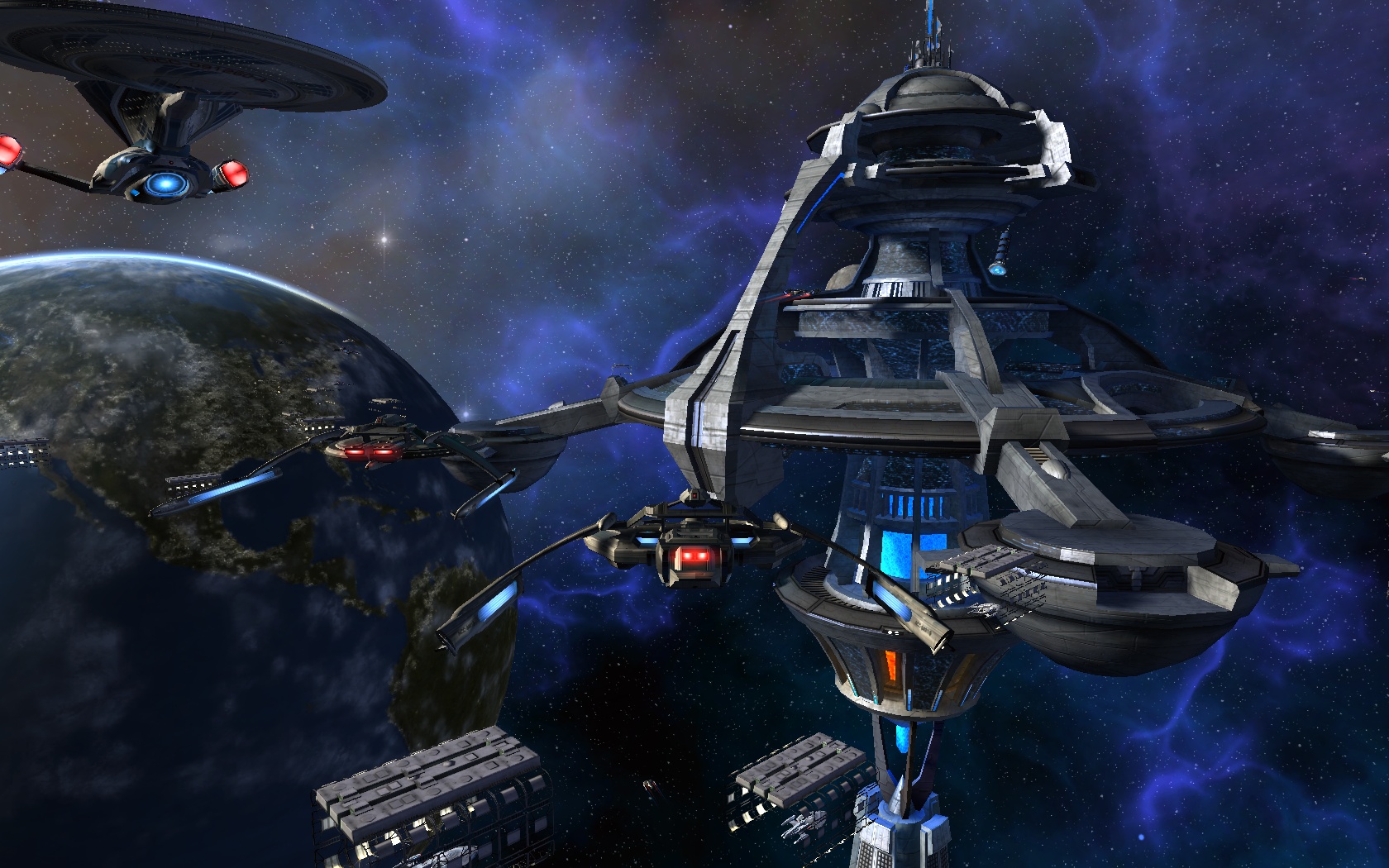

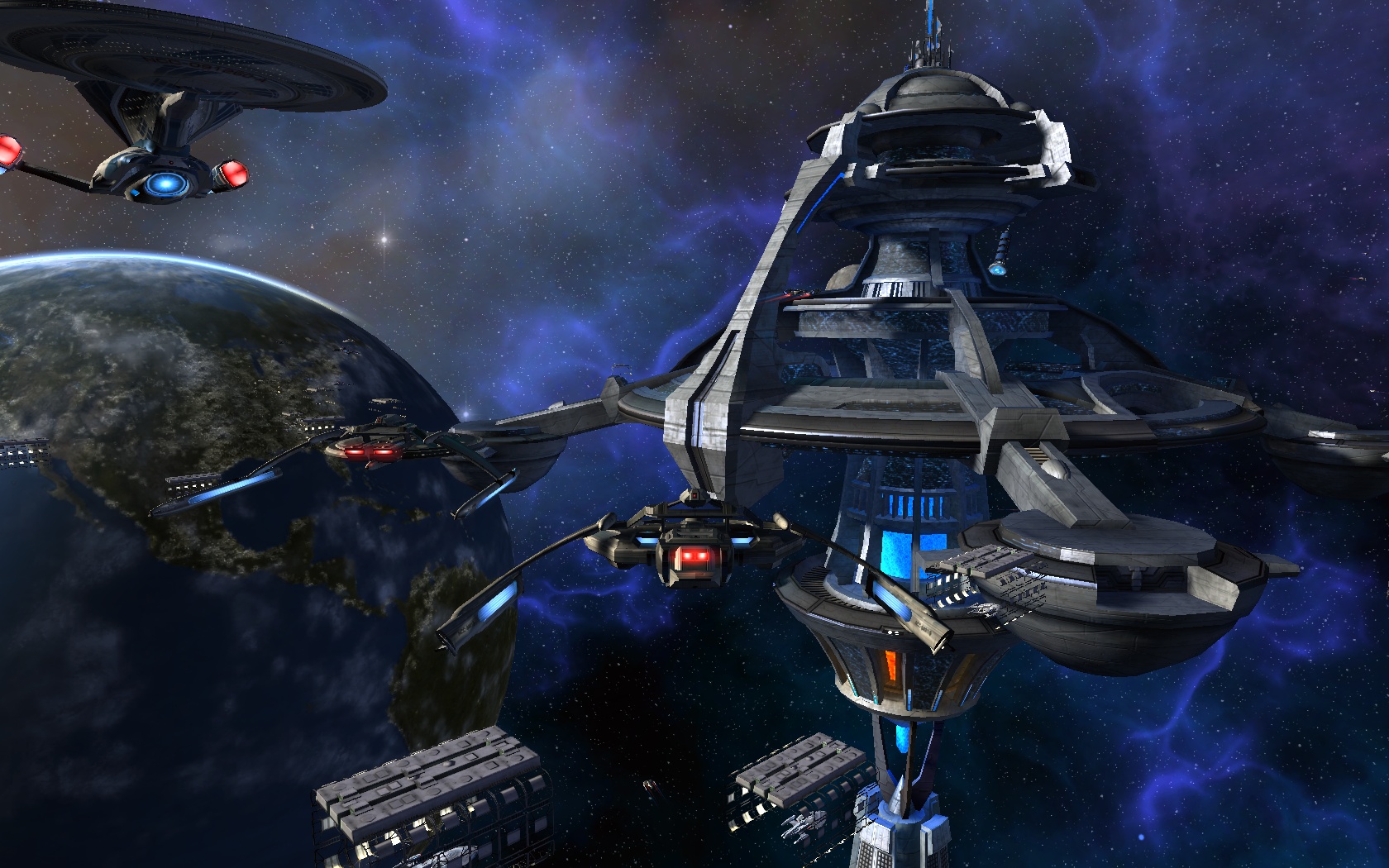

One of the first things you’ll notice when you start playing is how gorgeous the game is, especially in space. The development crew did a fantastic job recreating a wide variety of astronomical phenomena, making the game a real eye-pleaser. I constantly find myself taking screenshot after screenshot as I encounter amazing-looking locations.

For me, while fighting on away missions can be enjoyable, the dogfights in space are where the real fun is. By tailoring your own abilities and those of your bridge crew, as well as modifying your starship with just the right armaments, you can create thousands of strategies for battle, customizing everything to your style of play. Specialize in engineering with a bridge crew trained for weapons modification and overpower the enemy, or become a science officer with a bridge crew trained for trapping and weakening your opponent. The sky (or space) is the limit.

The list of what Cryptic got right goes on, but at the heart of it, I feel like they really captured Gene Roddenberry’s universe. All the major locations that will be familiar to any fan are present (Earth Spacedock, DS9, Memory Alpha, all the home planets). Each mission has details and backstory to go along with the tasks at hand – which I highly suggest reading. You can blow through character dialog and just play for the action, but getting fully into what’s going on really adds to the immersion factor.

The Bad

For me, STO took a while to get into. After I did, I was completely addicted, but for the first 5 hours or so, I was playing more because it was a Star Trek game and I really wanted to give it a fair chance. They scale back the difficulty to start, so it almost feels like you can’t die, which also made me question how fun the game was going to be.

Additionally, I wasn’t a fan of the away missions (and still have some reservations about the style of play, though I’m getting used to it). It’s a third-person view of your team, but actions are assigned as opposed to FPS style. You tell your character what action to take (shoot this enemy, create a shield generator, plant a bomb), but you don’t control how he shoots, as you would in an FPS. This makes ground combat very strategy-oriented as opposed to shoot-em-up. It still makes for an enjoyable and cerebral battle, but I almost feel like it would have been a better balance to keep the strategy to space battles and add more fast-paced fun to away missions. The AI for your bridge officers, while mostly good, can also be atrocious sometimes, especially in the heat of battle when you don’t have a chance to manually configure their actions.

The last major issue I see with the game is the lack of a community feel – it almost doesn’t feel like an MMORPG, more like a single-player game where you occasionally team up with shipmates for space battles. I think this is due less to any shortcoming of design and more to the confines of doing a Star Trek MMO, in which there are thousands of planets separated by long space flights, as opposed to your typical MMO, where you’ll constantly see everyone running around in a relatively small area with battles nearby and on the way to your destination. This isn’t that big of an issue for me, as I’m less of a social MMO player, which is why I never got too heavily into WoW – but I think for many people it will be an issue.

The Ugly

There are few major issues with this game, but being hot off the grill, it does have its share of bugs, which are being handled as they come up. I’ve found my ship trapped inside objects on a few occasions, to the point where I can’t move. I’ve also had strange UI issues, trouble cleanly exiting an area, mission issues, etc. But like I said, the game is brand new, and like any MMO, it will take a while to refine. In addition, as time goes on, Cryptic will add more and more content, which I’m looking forward to as well.

Get Ready to Boldly Go

So whether you’re a Star Trek fan like me, or looking for a new MMORPG experience (or both! It’s not surprising that many Star Trek fans are also video game players), give STO a try. With multiple pricing plans (monthly, yearly, lifetime, etc.), you can give it a whirl for a while, and then make a bigger commitment if you find yourself hooked. Check it out at the official website: www.startrekonline.com.